Tag: music

-

Music like it's 1999

In March 1999 an online label for electronic music was launched. It was in Umeå, and the two of us who ran it, Fredrik Mjelle and Sakarias Wangefjord, were both DJs in a crew that had been arranging club nights and concerts at various venues for a few years. A typical night: Sakarias played drum and bass on the dance floor, Erik Hedström and…

-

I AM SAFE SECURE ENCOURAGED

Side project: the Portland label Ausland is releasing a record in collaboration with Earth People. Our own Viktor has done typography on top of images by LA designer Lenore Melo, and our own Fredrik is producing the music under the name Beem. The record features vocal geniuses such as Bahamadia, the Philadelphia legend who is one of the greatest classic voices in hip-hop overall and has worked with DJ Premier,…

-

Blipp Blopp för barn

Last year we did a fun side project that hasn't been mentioned here: Blipp Blopp för barn ("Blipp Blopp for Kids"), which was nominated for a Grammis as "Best Children's Album"! Kids need more fun culture, and what is more fun than farting synths, ugly vocal noises and robot dancing? Blipp Blopp för barn is an electronic children's record released on vinyl and digitally, with Earth People acting as the record label. Andreas made cover balloons…

-

The Club Webbklubben

It just occurred to me that we have been running rather nice afterworks at our place in Gamla stan for several years, and forgot to tell anyone about them. If you have known us for a while you may have been here; otherwise you are welcome in the future. Keeping it simple, we serve beer and book an artist we have been listening to. It…

-

Quiz buttons in node.js

For our recent fall party we made a music quiz, and if you’re quizzin you gotta have some buttonpressin. We looked for quiz buttons at Teknikmagasinet but, surprisingly, didn’t find anything. So, www, what do you have to offer? Nothing? Well ok, let’s build some buttons in node.js. Real time web! 5 teams, connected to…

-

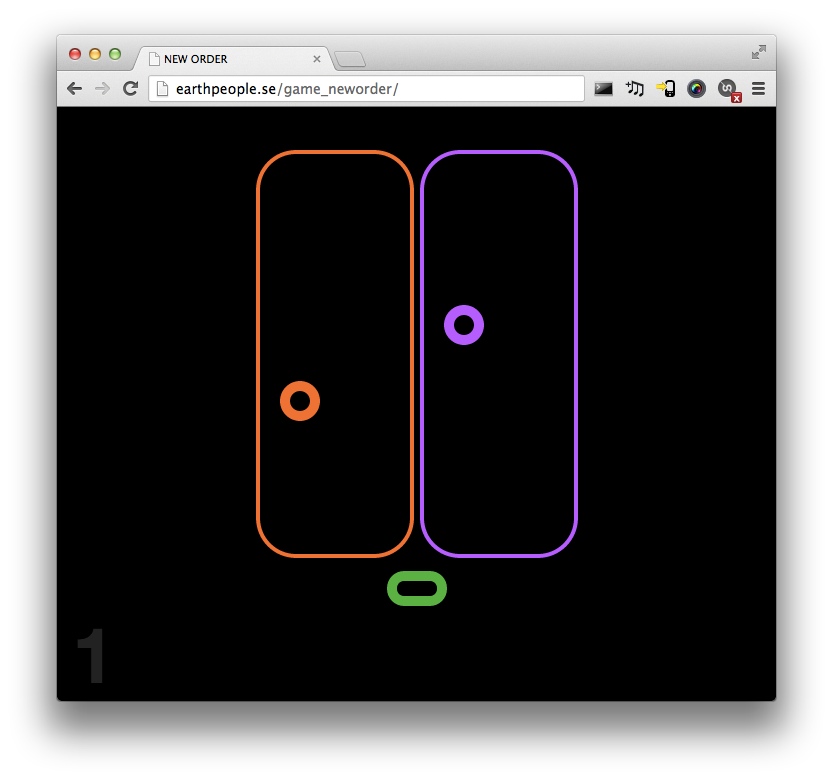

Web audio music game

After we did the rhythm experiment at Music Hackday we fooled around a bit more with the web audio synthesizer. It’s pretty amazing that Chrome has a built-in synth, and if you have some knowledge of synthesizers, the different audio experiments out there (Google’s own Minimoog, for example) actually seem pretty straightforward,…

-

Our #musichackday hack – Stealing Feeling!

UPDATE: We actually won an iPad Mini courtesy of Echonest for the hack! Very encouraging! Fredrik, Adrian and I attended the Music Hackday in Stockholm, and made a little something. In short: 1. Steal the feeling from someone who’s got more of it than you. 2. Apply it to your music. It’s based around a 16…

-

Line-out scrobbler – when DJ’ing

When DJ’ing at Debaser Slussen yesterday, we decided to hook up the Line-out scrobbler to the DJ mixer. I knew that Echonest wouldn’t be able to resolve all the weird stuff we play, but was hoping for at least a 70% success rate. Unfortunately we had a resolve rate of about 20%, which makes our little…

-

Line-out scrobbler

For years I’ve been trying to hijack music recognition services like Shazam to be able to recognize music. I’ve finally got this working thanks to the fine guys at Echonest, who kindly provide a proper API for this. My proof of concept is running on a spare MacBook. Here’s how it works on the Mac…

-

NOW On Roskilde

Now On Roskilde is a mobile application that users can easily add to their home screen. It shows what’s on right now, together with related artists playing in the near future. Since nothing is playing right now (and the correct feed hasn’t been published yet), the app is pretty worthless at the moment. There is a…

-

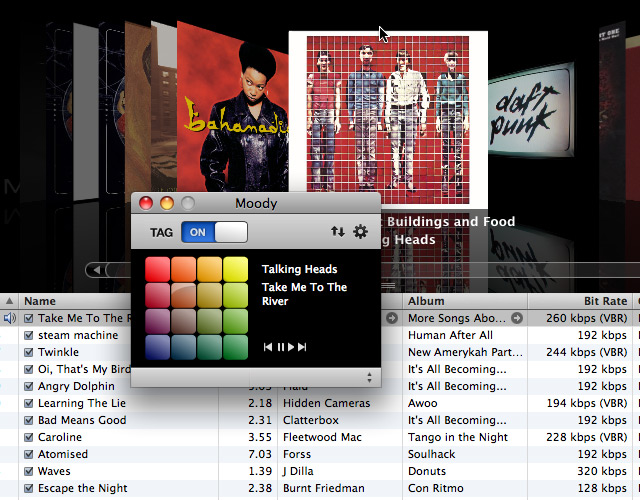

Extracting mood data from music

We have provided data from the Moody database to a group of researchers in the Netherlands, who have been doing some really interesting work. Menno van Zaanen gives us a report: As a researcher working in the fields of computational linguistics and computational musicology, I am interested in the impact of lyrics in music. Recently,…

-

SimpleSong 0.2

SimpleSong is becoming quite an iTunes competitor, in its own way. I’ve used it quite a bit myself, much more often than I thought I would. If you’re tired of dealing with a library, and want a library-free music player that is minimalistic, lightweight, and has close to zero startup time – this might…